This resource is provided by ACSA Partner4Purpose ThoughtExchange and was written by Sarah Mathias

Artificial Intelligence (AI) has disrupted how we work, live, and play over the past couple of years. And like it or loathe it, it’s here to stay. In K-12 education, the technology promises to enhance learning experiences and streamline teaching and administration. But districts need to ensure responsible and ethical use.

Developing clear policies helps align AI use with district goals for student success. Engaging educational partners—students, staff, parents, and community members—is crucial for developing equitable AI policies.

AI technologies offer district leaders operational benefits, such as faster data analysis, improved decision-making, and time and cost savings. AI-powered engagement can facilitate efficient and inclusive district-wide conversations so districts can tap into diverse perspectives. Choosing responsible AI vendors is essential to protect student and staff privacy and ensure the ethical and accurate use of data to train AI.

This article explores tools and strategies to help leaders responsibly harness AI’s potential. By fostering an inclusive, ethical, and well-governed approach to AI integration, districts can enhance educational outcomes and prepare students for a successful future.

What’s AI, and how are K-12 districts using it?

Our education system’s all about human intelligence, so what role does artificial intelligence (AI) have to play?

Put simply, AI is “the science of making machines that can think like humans” (HCLTech, September 2021). AI technologies can process large quantities of data, quickly recognize patterns, and make decisions based on those patterns. Unlike humans, AI can do this in seconds.

Now, schools are grappling with the next iteration of AI. Generative AI tools go beyond recognizing data patterns to creating prompt-based content. Teachers and students use tools like ChatGPT, which creates text, and DALL-E, which creates images. Schools must provide guidelines on their responsible use in the classroom and district initiatives.

The state of AI in K-12 schools

- A 2024 EdWeek poll showed that 79% of surveyed educators “say their districts still do not have clear policies on the use of artificial intelligence tools.”

- 20% say their district prohibits students from using generative AI

- 7% say both staff and students are banned from using generative AI

- 78% say they don’t have the time or bandwidth to teach students about AI (Education Week, February 2024).

- ACT research found that as of fall 2023, 46% of American high school students were using generative AI in their schoolwork (ACT, December 2023).

- A fall 2023 RAND study found that 18% of K–12 teachers reported using AI for teaching, and another 15% had tried AI at least once (RAND, April 2024).

“We’re not going to ban ChatGPT. We think that it’s a tool. We want to teach our kids how to use it ethically and responsibly and how to trust and not trust certain things that might come out of it.”

– Sallie Holloway, Director of Artificial Intelligence and Computer Science, Gwinnett County Public Schools, Georgia

Instead of banning AI tech, K-12 districts need to embrace responsible use. This means developing guidance for using AI in schools, identifying where it can improve and streamline district initiatives, providing professional development for teachers, and using education technology that puts student and district data privacy first.

What is responsible AI?

The principles of responsible AI in education provide a framework to govern its usage in schools: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability (Educators Technology, May 2024). Districts need to develop governance around AI use to ensure it adheres to the district’s student success goals.

Strategies for responsible AI integration

In “7 Strategies to Prepare Educators to Teach with AI,” EdWeek maintains that “educators should have a foundational knowledge of artificial intelligence, machine learning, and large language models (the technology behind ChatGPT and other chatbots)” before learning how to use AI to teach (Education Week, June 2023).

They recommend that districts integrate AI with these seven strategies:

- Develop a foundational understanding of AI, machine learning, and large language models

- Train in existing AI tools and technologies

- Engage with AI4K12.org’s 5 pillars for teaching AI literacy: perception, representation & reasoning, learning, natural interaction, and societal impact

- Demonstrate AI use in the classroom

- Incorporate AI into the existing curriculum instead of offering it as an add-on course

- Integrate AI into the classroom through the lens of equity, cultural responsiveness, and ethics

- Incorporate AI literacy training into professional development programs

Educators need to understand that AI use is widespread—the technology is used daily on social media and in voice assistants. Schools can also integrate AI literacy and education into every subject area.

Here are some ways districts can promote AI literacy among their educational partners:

For the community

- District-led AI literacy workshops or town halls with parents/guardians

- Partnerships with higher education institutions that offer community workshops

- Emory University’s Center for AI Learning

- MIT’s K-12 AI Literacy project

- UC Berkeley’s free AI lectures

- Involve parents in educating their learners on AI technology use

- Internetmatters.org offers a free parents’ guide to AI

For administration & staff

- AI literacy professional development programs

- The International Society for Technology in Education (ISTE) offers AI PD certified by the U.S. Department of Education

- Training on federal/regional AI guidelines

- Artificial Intelligence and the Future of Teaching and Learning from the U.S. Dept. of Education

- Staff engagement in developing AI guidelines for teaching and learning

- AI-powered platforms can both demonstrate responsible AI technology and quickly gather a wide range of staff perspectives on proposed AI guidelines

For the classroom

- Student engagement using responsible AI technology

- AI-powered platforms can demonstrate responsible AI technology through student engagement

- Online AI literacy classes for students

- Outschool.com leads online AI workshops for kids aged 7-18

- Partnerships with higher education institutions that offer classroom curricula

- MIT’s Impact.AI: K-12 AI Literacy project provides a suggested framework for middle school AI curricula to “empower students to become conscious consumers, ethical engineers, and informed advocates of AI”

Engaging educational partners in developing AI guidelines

To develop AI policies that serve all educational partners, districts need to engage all groups and consider different perspectives—not just ask the experts. Leaders should consult students, staff, parents, and community members on district policies or guidelines that impact them.

- Staff may be using AI technology in their lesson planning and classrooms—they’re the front line of the district

- Students will be using AI both inside and outside the classroom and will need AI skills for future careers.

- Parents and community members can be involved in how their learners are using AI in the classroom

Including different educational partners in the conversation about AI policies will help districts understand their perspectives and lessen their fears about this new technology.

Using an AI-powered platform for deeper engagement

A comprehensive AI engagement platform can speed up and streamline the consultation process, allowing districts to keep up with the pace of technology. These platforms can make the most of AI to offer districts the following:

- Multiple quantitative and qualitative engagement methods

- Anti-bias technology and multi-language participation for equitable engagement

- Instant in-depth analysis of large data sets

- Ability to pinpoint ideas with high alignment

- Ability to identify common ground in polarized groups

- Fast custom reporting to share results easily

- Top-notch data security and responsible AI governance

The right engagement platform, built with security and privacy at its core, builds trust with your educational partners. Demonstrating how AI can elevate their voices—instead of replacing them—will encourage people to embrace new AI software in your district.

Operational benefits for districts

Many of your educational partners might think of AI as a shortcut for students, but the technology provides educators with significant operational benefits. Here are a few ways AI technologies can transform how superintendents, communications professionals, administration, and teachers tackle their jobs.

Speed and efficiency: Use AI to complete lesson plans, meeting agendas, educational partner communications, data analysis, and reporting in seconds.

Cost and time savings: Leverage AI for routine administrative tasks and AI data analysis for more effective funding allocation. Engage with and gather data from large groups without the expense of town halls, meetings, and focus groups.

Improved record monitoring and targeting for interventions: Student counselors can use AI technology to monitor student records, focus on evaluating flagged students, and help them graduate.

Ability to analyze larger data sets: AI lets districts consider a broader range of data when making important decisions. Perform custom analysis in minutes and leverage the district’s data to suggest talking points, pinpoint actions, and provide summaries.

Better engagement: Surveys, focus groups, and town halls are time-consuming. AI engagement platforms shorten the time from ask to action by gathering large qualitative and quantitative data sets and analyzing them quickly. They also give participants more innovative and engaging ways to provide feedback to the district.

DeKalb County School District leverages AI-powered engagement

Dr. Yolanda Williamson, DeKalb CSD’s Chief of Community Engagement, acknowledges her district’s changing demographics require new engagement strategies.

That’s why DeKalb used ThoughtExchange to develop the district’s strategic plan. This enabled DeKalb’s leadership to gather quality data from a broad range of community members to inform its focus areas—a process that could take months without the instant, in-depth analysis AI provides.

Dr. Williamson says, “Because of AI, it takes me 30 seconds to get a report and determine what’s next in terms of what we need to discuss and what actions we need to take to move forward.”

Implementing guidelines and regulations for AI use

Establish clear guidelines for safely and responsibly using AI in education. Ensure AI tools are used ethically, focusing on student privacy and responsible usage. By providing guidance, leaders can make sure that AI enhances students’ learning experiences without compromising safety or privacy.

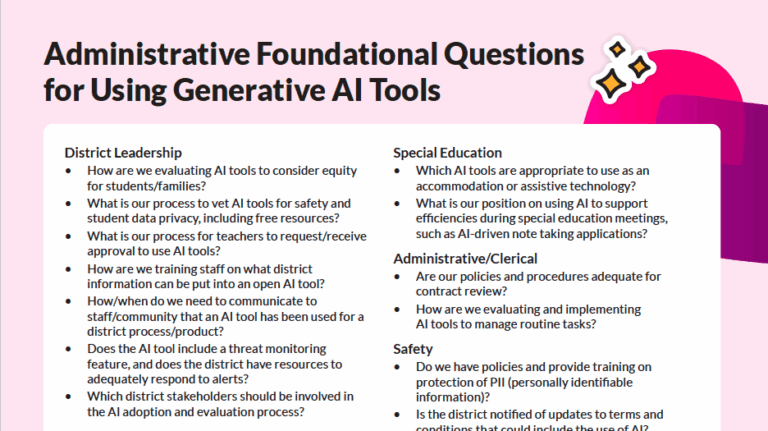

Developing your district’s AI policies

Although AI is new to most K-12 districts, many states, districts, and organizations have already developed AI policies and guidelines that can help districts as they develop their own.

Virtual Arkansas (2024), a supplemental online education platform that supports Arkansas school districts, recommends districts consider the following questions when developing their AI guidelines and regulations:

- How will AI integration align with the district’s educational goals and values?

- How will AI be used in the classroom, and how will it support student learning?

- What data will be collected, and how will it be used and protected?

- How will teachers be involved in AI integration, and what professional development will be provided?

- What privacy protocols are in place to protect student and staff data?

- How will the accuracy, reliability, and bias of AI output be assessed?

- How will stakeholders remain informed about the design and intended use of AI tools?

- What processes will be in place for stakeholders to demonstrate consent for use of these tools?

TIP: Use an AI experience and engagement platform specializing in qualitative data analysis to ask educational partners for their insights on the questions relevant to their experience in your district.

Choosing responsible AI vendors

As a K-12 district, you’re responsible for a vulnerable cohort: kids. That’s why you’ll want to choose AI technology that rigorously protects personal and academic data.

When looking for AI education technologies, consider the following questions:

- Do they have a responsible AI policy?

- Do they have an AI governance policy?

- Do they have policies to mitigate bias in AI?

- Do they have an AI accountability policy?

- Do they have goals and ethics around how their AI is used?

- Do they comply with best-in-class data security regulations and certifications?

- Do they encrypt and back up data?

- Do they have comprehensive cybersecurity measures in place?

- Do they guarantee they won’t sell your participants’ data?

Ensuring security

Cyber attacks can lead to unauthorized access, data loss, and significant operational disruptions. Making sure your chosen AI vendor has robust cybersecurity measures is crucial to preventing data breaches that could expose sensitive student and staff information for misuse and exploitation.

Protecting student privacy

AI systems can quickly collect vast amounts of sensitive information, including names, addresses, birthdates, and academic records. This makes students vulnerable to identity theft and fraud, which can have severe consequences for them and their families.

Data breaches can have long-lasting effects on children’s safety and well-being. Your AI vendor should have clear policies around student privacy. This commitment is about nurturing a safe, supportive space where learning and collaboration can thrive.

Look for a platform that’s committed to upholding student privacy, including:

- No selling of student data — students’ information remains private and secure.

- No behavioral targeting — students won’t be subjected to ads or marketing based on their data.

- Educational use only — data is strictly used to enhance learning outcomes.

- Stringent security measures — robust protections ensure data is safe from security breaches.

- Full transparency — sharing clear policies and practices that build trust.

- Data access — parents, educators, and students can access their own data, ensuring openness and control.

Also, ensure AI vendors comply with the Family Educational Rights and Privacy Act (FERPA), the Protection of Pupil Rights Amendment (PPRA), and the Children’s Online Privacy Protection Act (COPPA), which are designed to protect students’ education records and personal information. Districts can avoid legal repercussions and maintain trust by ensuring AI systems adhere to these regulations.

Teaching the future

Integrating AI into K-12 education can enhance learning and administrative efficiency, as long as districts approach it responsibly and ethically. Districts can demonstrate responsible AI use by encouraging AI literacy among educational partners and using AI tech to streamline staff workflows and promote district objectives. But selecting responsible AI vendors is paramount to protecting sensitive student and staff data.

Engaging all educational partners—students, staff, parents, and community members—is vital in developing AI guidelines that reflect their diverse perspectives and foster trust. AI-powered engagement platforms can streamline this process, offering fast, in-depth analysis and actionable insights that align with community priorities.

Ultimately, AI’s role in education should be to expand and support educators’ work, not replace it. By implementing robust guidelines, providing ongoing training, and promoting an inclusive environment, districts can harness AI’s power to prepare students for a future where AI literacy is crucial.

References

Artificial Intelligence, Defined in Simple Terms, HCLTech, Aruna Pattam, September 2021

https://www.hcltech.com/blogs/artificial-intelligence-defined-simple-terms

Schools are Taking Too Long to Craft AI Policy. Why That’s a Problem, Education Week, Alyson Klein, February 2024

https://www.edweek.org/technology/schools-are-taking-too-long-to-craft-ai-policy-why-thats-a-problem/2024/02

Half of High School Students Already use AI Tools, ACT Newsroom and Blog, December 2023

https://leadershipblog.act.org/2023/12/students-ai-research.html

Using Artificial Intelligence Tools in K-12, RAND, Melissa Kay Diliberti, Heather L. Schwartz, Sy Doan, Anna Shapiro, Lydia R. Rainey, Robin J. Lake, April 2024

https://www.rand.org/pubs/research_reports/RRA956-21.html

The 6 Principles of Responsible AI in Education, Educators Technology, Med Kharbach, May 2024

https://www.educatorstechnology.com/2023/11/principles-of-responsible-ai-in-education.html

Virtual Arkansas, 2024

https://virtualarkansas.org/

7 Strategies to Prepare Educators to Teach with AI, Education Week, Lauraine Langreo, June 2023

https://www.edweek.org/teaching-learning/7-strategies-to-prepare-educators-to-teach-with-ai/2023/06

Sarah Mathias has a BA in Sociology and an MPC in International/Intercultural Communication. She mentored and taught students of all ages at home and abroad, and honed her corporate writing skills in the travel insurance and fashion industries before joining ThoughtExchange.